My AWS Account Got Hacked - Here Is What Happened

I want to share a recent attack on my personal AWS account - what happened, how the attacker operated, what I learned, and what could have been done better.

As someone who used to hunt vulnerabilities professionally (and still does it recreationally), and as a cloud architect, I know how this stuff works. So when it happened to me, it was a wake-up call - not because I made a silly mistake, but because it showed how real and targeted these attacks can be.

We often tell ourselves:

“It won’t happen to me.”

“I’m not that interesting.”

“What could anyone even do with my account?”

But it can happen. And it will happen - eventually.

Security isn’t something that magically happens after the fact. It has to be by design. Especially if you’re freelancing or experimenting on your own cloud - this time, it’s your pocket on the line, not a big company’s budget write-off.

I was lucky: I detected the attack fast, reacted immediately, and contained it. Still - it was stressful, messy, and humbling.

This incident really made me think - and only grew my appetite to tackle the hard problems the security industry (still) faces.

In the next sections, I’ll walk you through:

- The attack from my point of view - what I saw, what I thought, what I did.

- The attacker’s likely flow and intent (as reconstructed from the traces).

- And finally, what I did next to trace them back and strengthen my defenses.

2-3 hours before realizing

We had just wrapped up our daily scrum. The management team stayed on for a quick chat about next-quarter goals.

And then it started.

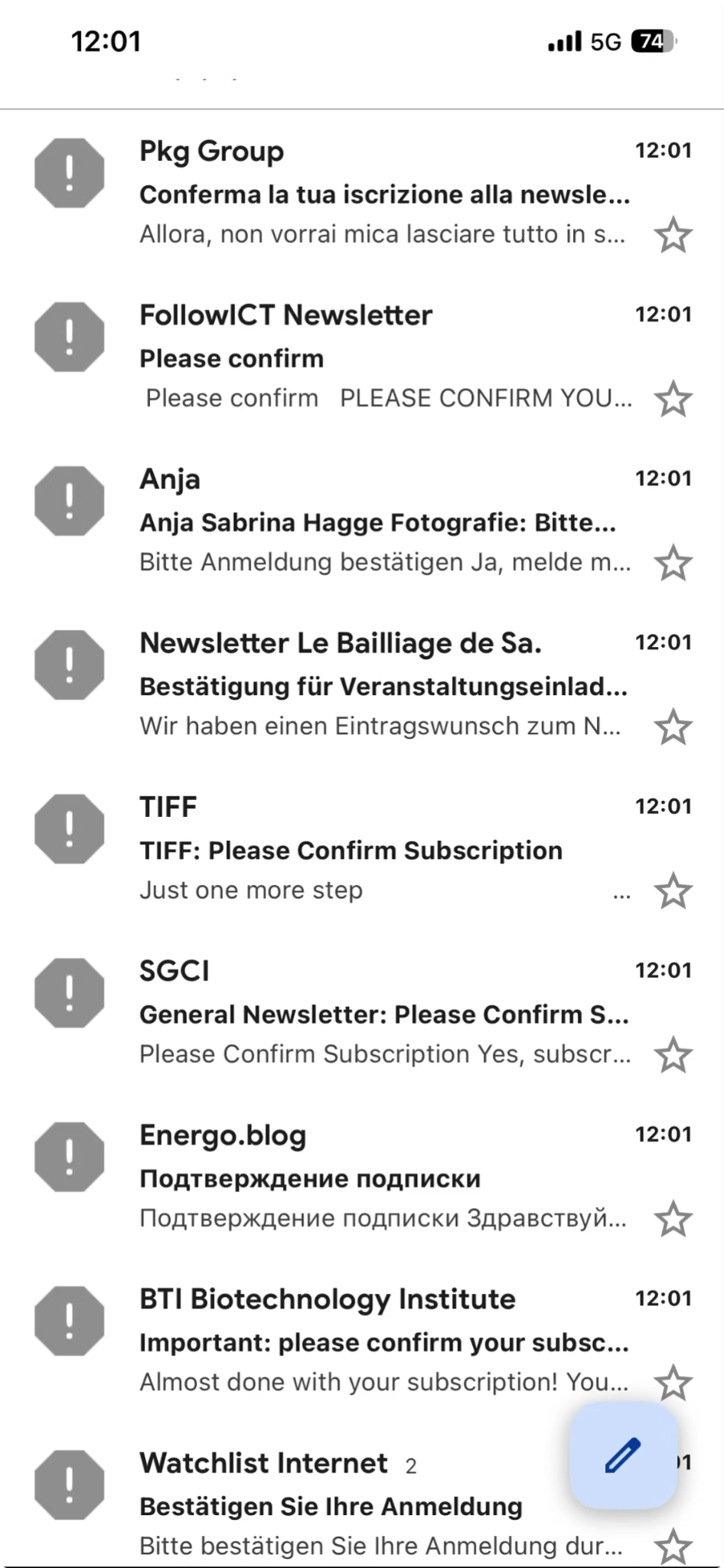

My inbox began to explode with spam. Not the usual junk - this was a flood: newsletters in random languages, sign-ups for services I’d never heard of, confirmation emails from dozens of companies.

Within an hour, I had over 70 new emails, not counting what my spam filters had already caught.

At first, I thought, That’s odd. I skimmed through them absentmindedly while staying in the conversation. Luckily, I didn’t just bulk-delete everything - something felt off. So I opened each one, checking the sender names and subjects, trying to make sense of it.

I kept asking myself:

What’s the goal here?

Is someone pranking me?

Why would anyone do this?

It didn’t make sense yet. But it would - a few hours later, when I connected the dots.

1 hour before realizing

The spam flood didn’t stop. Every few minutes, another dozen emails - confirmations, newsletters, random subscriptions in every possible language.

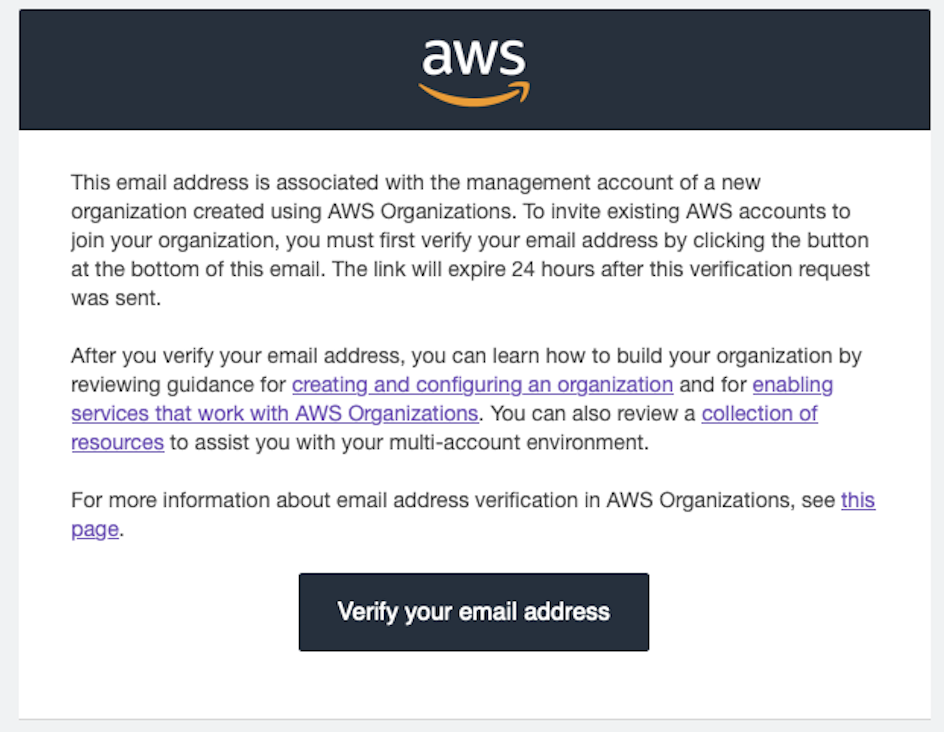

Then, buried in the noise, one message caught my eye:

AWS emails me all the time - invoices, alerts, confirmations. But this one felt… odd.

“Wait, I didn’t create an Organization. Or… did I?”

It’s important to know that around that time, I was actively building a website (shhh). I’d been deep in AWS for days - creating resources, testing configurations, deploying updates. So an email like that didn’t seem out of place.

My brain went: It’s probably something I triggered earlier. I’ll check it later. That’s fine.

You know what? Let’s make sure. I even asked ChatGPT, “This looks fine, right?” It also said, “That’s fine!”

So, I clicked the link. 🫣

(Why did the attacker do it? Patience - I’ll explain soon.)

Realizing!

A few more hours passed. The spam storm slowed down but didn’t stop - a steady drizzle of strange subscriptions still hitting my inbox.

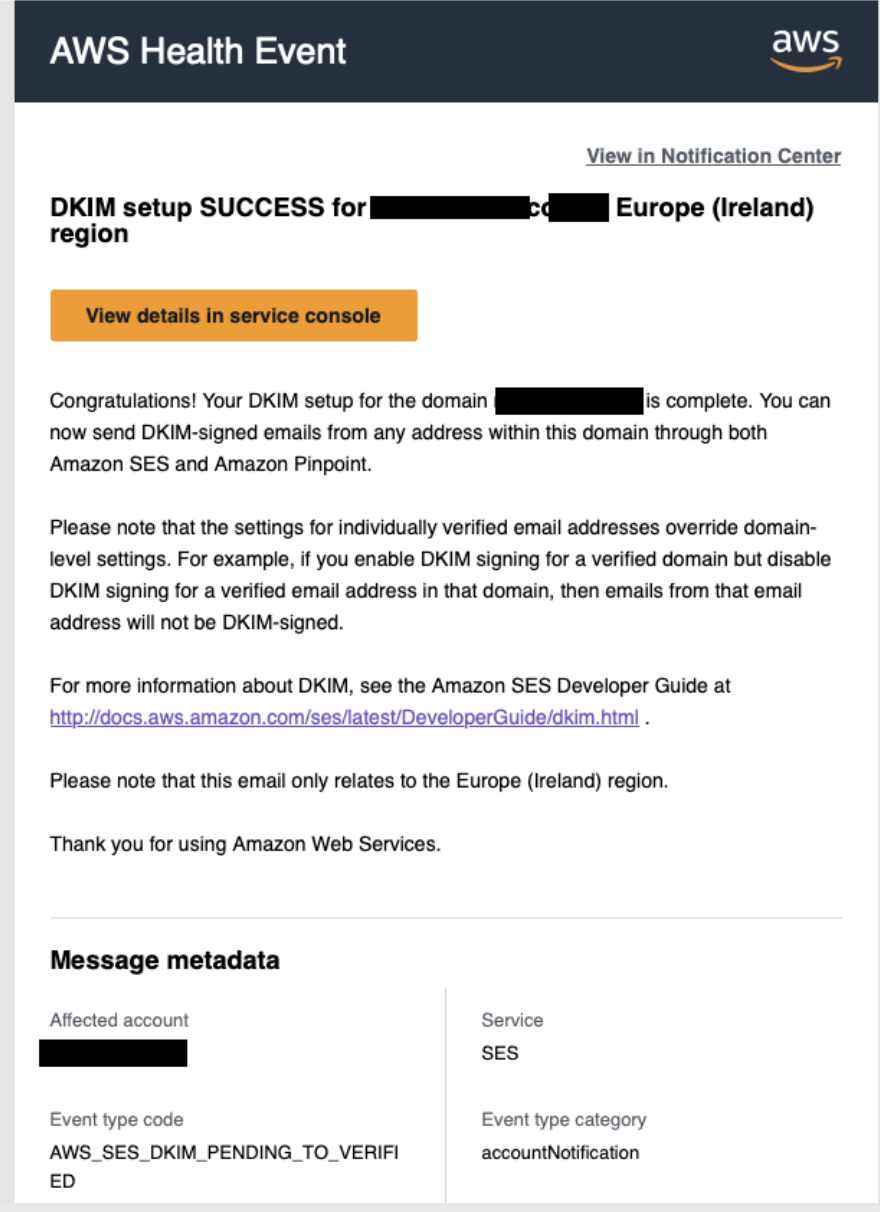

And then, this email arrived:

I froze. “This is not a coincidence anymore,” I thought.

I switched into under-attack mode.

Of course, before panicking, I did what every modern tech person does - I asked ChatGPT. And it confidently replied: “That’s normal! Don’t worry - AWS rotates DKIM codes sometimes.” So I almost relaxed.

But then I saw something odd:

AWS_SES_DKIM_PENDING_TO_VERIFY.

“Pending to verify?” Why pending? I knew I hadn’t created or changed anything related to SES.

That’s when it clicked - this wasn’t me.

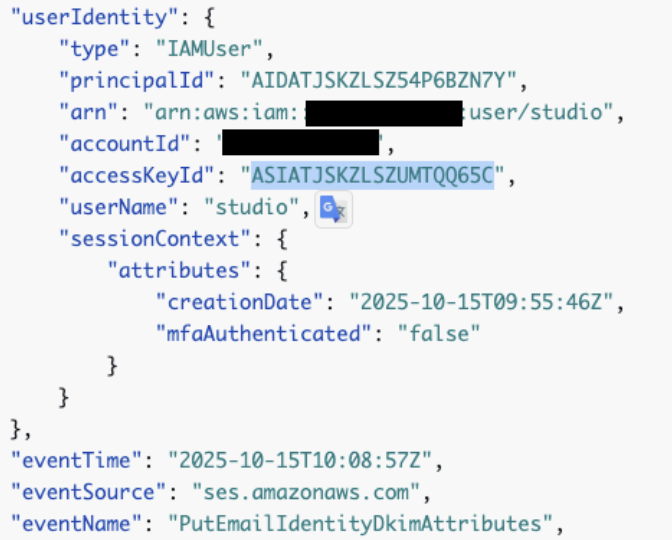

I opened CloudTrail and filtered events in the Ireland region. There it was: the DKIM setup event, created by an IAM user named studio.

I never created such a user.

That was the moment I knew for sure - I was under ATTACK.

(And yes - I’ll explain later what SES is and why the attacker did all this.)

First 1-2 hours - understanding the severity

At this point, one thing was clear: it didn’t matter how the attacker got in, or who they were, or what they wanted.

First priority: contain the situation and secure my account.

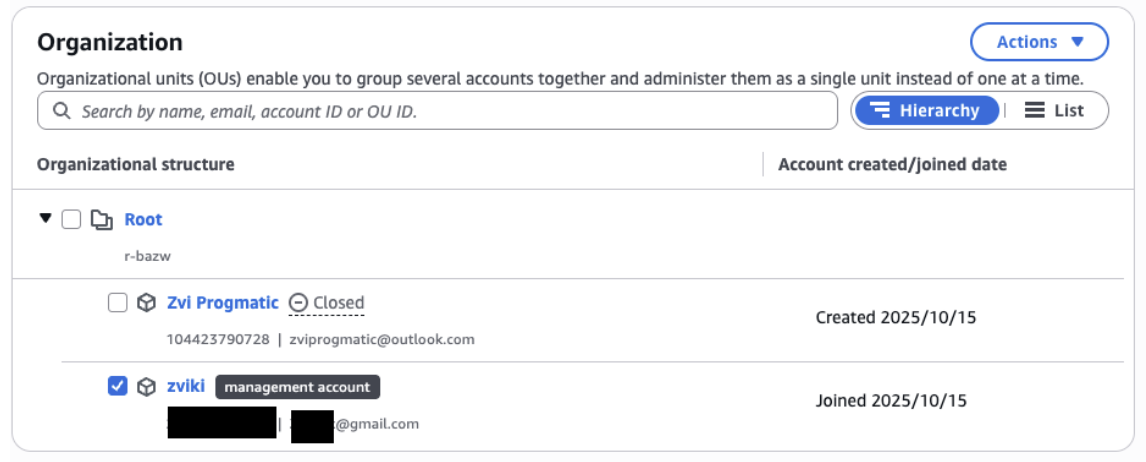

I quickly realized the attacker had touched my AWS Organization. I went straight to Account Management and Organization Settings.

- My email was still the management account - I could log in via Cognito, MFA and all.

- I immediately reset my password.

Pay close attention:

- The management account is my account! We can breathe. This means that the Organization belongs to my account - the attacker didn’t take over the Organization.

- The dates are literally “now” - it happened now, not by me.

- The dummy account is not mine, the email is not mine, and it’s already closed - which means it was just a “dummy account”.

Next step - remove attacker access.

I opened the IAM dashboard: four new IAM users had been created.

I deleted all of them, along with all AWS keys I had for programmatic access.

At this point, I still didn’t know how the attacker got in - but better safe than sorry.

I didn’t know it at that point, but I do now - the attacker no longer had access to my account!

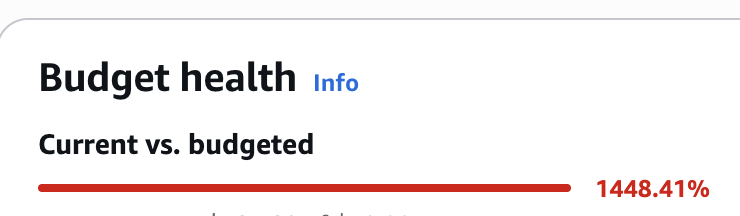

The Money Problem

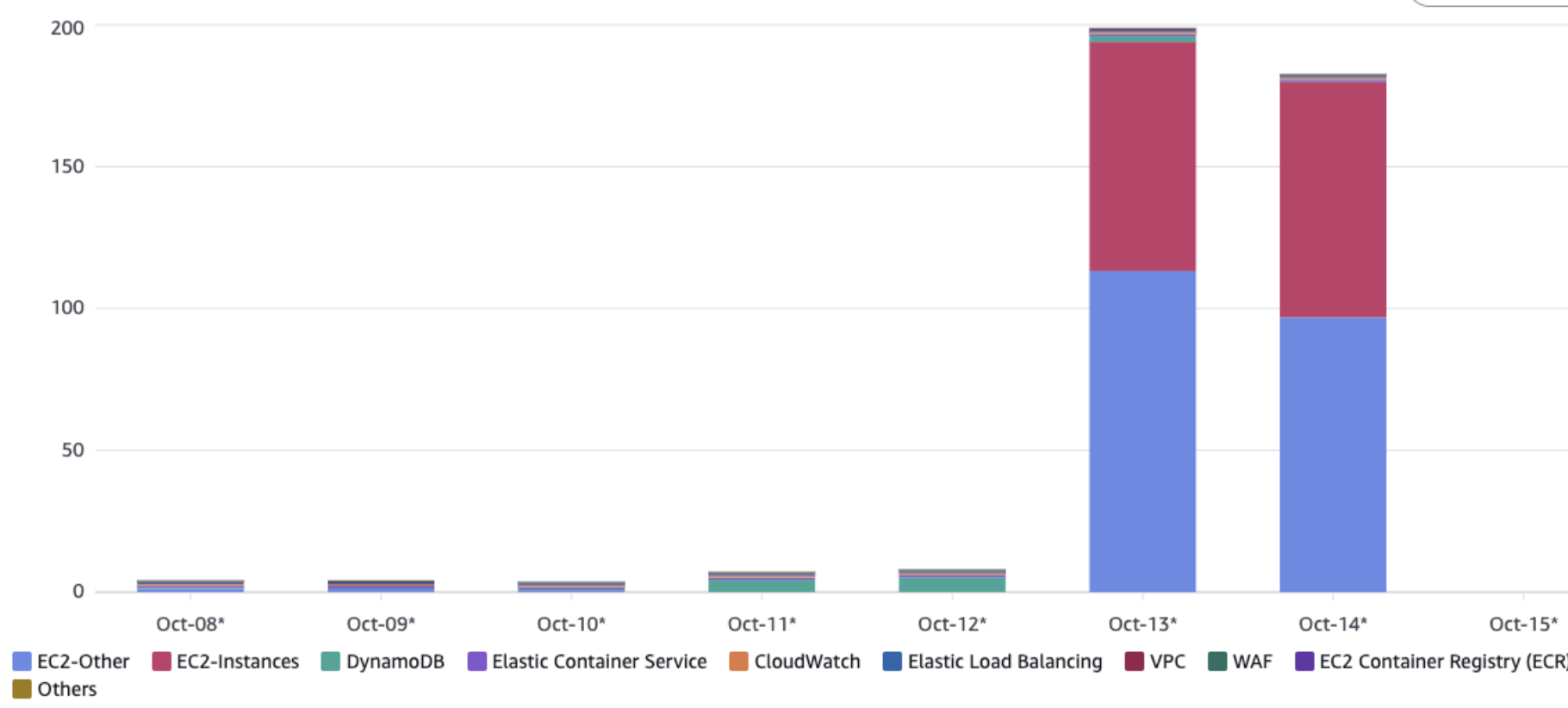

Next, I had to face the unpleasant truth: checking the cost spike.

My personal account’s bill had blown up - a 400%+ increase in spending. Naturally, this wasn’t fun. 😅

Time to investigate:

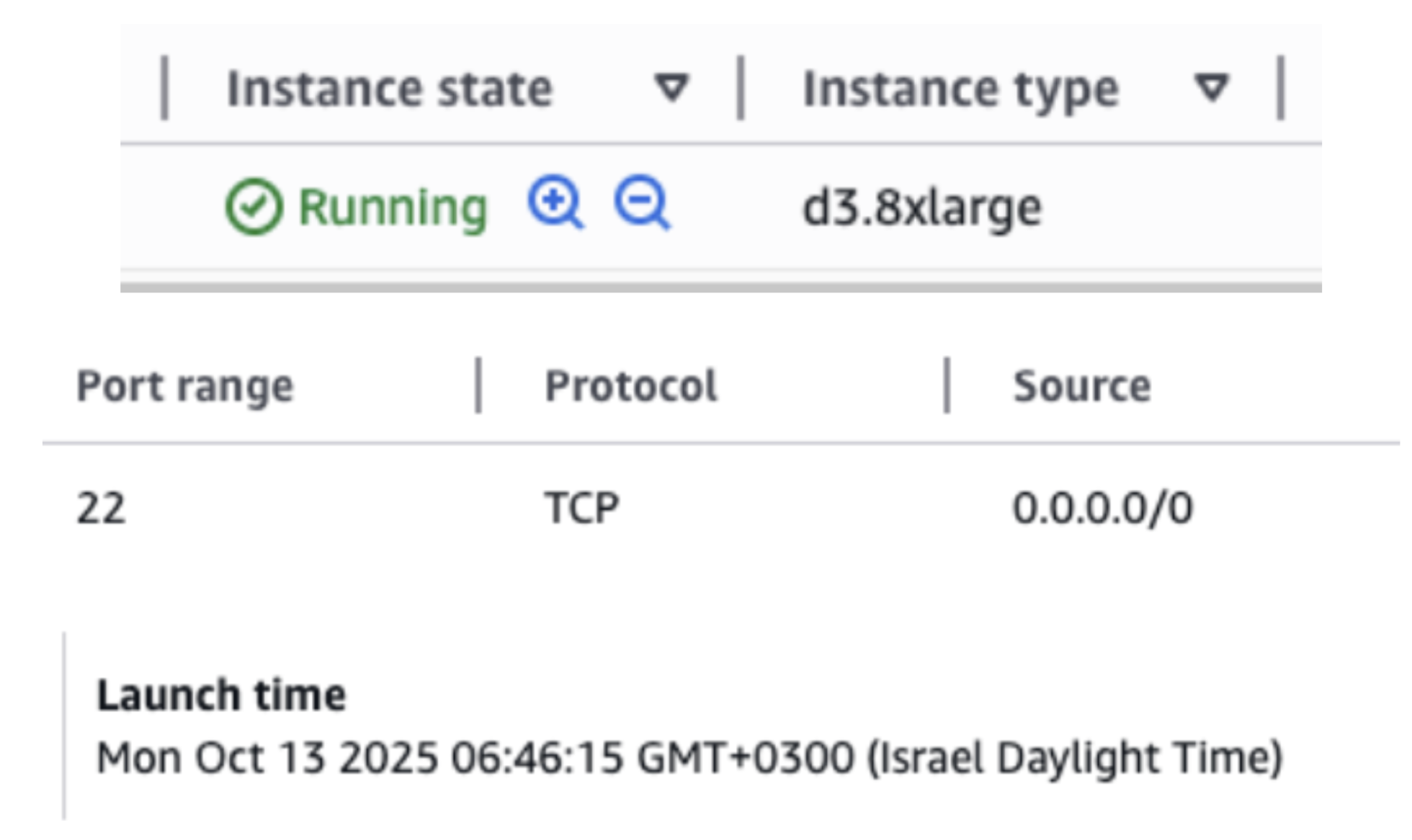

- EC2 usage - surprising, because I normally run serverless only.

- Filtering by region, it was clear: the attacker loved us-east-2 (I usually stick to us-east-1).

I terminated all the attacker’s EC2 instances, deleted their security groups and key pairs. I also removed the rogue DKIM records they had set up.

Locking Everything Down

After that:

- Re-checked IAM resources and SSH keys.

- Turned off my new website temporarily. I instructed a customer I manage to change their email passwords.

- Rotated all my OpenAI keys and GCP credentials.

By the end of this first response, I was feeling more confident.

The attacker’s access was gone. The immediate threat was contained. The account was back under my control.

Hour 3 - Incident analysis

I contacted AWS - and they responded surprisingly fast.

The first thing they wanted to confirm was that I still had access to the management account. Once I told them I had performed an initial incident response, they relaxed a bit and put my account into an “Under Attack” state. That mode restricts a lot of actions until AWS believes the account is safe - useful, but I didn’t stop there.

I continued my own analysis.

Step 1 - inventory everything

I used Resource Explorer to generate a full inventory of resources across the account. At a glance I didn’t see any unknown resources - a good sign, but not definitive.

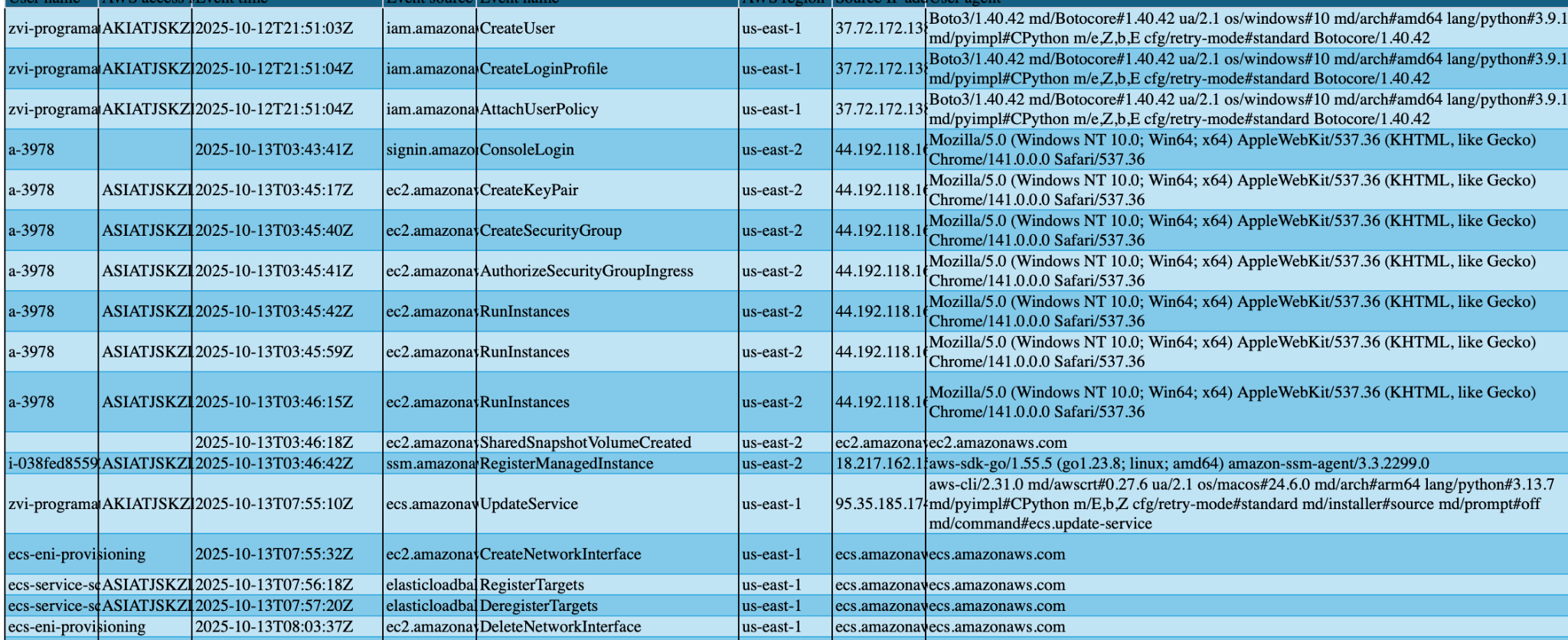

Step 2 - build a timeline from CloudTrail

I went region-by-region and exported CloudTrail event history to CSV for each region, then merged them into a single large CSV filtered by time. That let me replay every action, in order - exactly what you want in an IR.
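The merge step can be sketched in a few lines of Python. This is a minimal illustration, not the exact script I ran: the column name `Event time` and the timestamp format are assumptions about the CSV export, so adjust both to match your files.

```python
import csv
import io
from datetime import datetime, timezone

# Assumptions: the export has an "Event time" column holding UTC
# timestamps like 2025-01-01T12:00:00Z. Adjust to your own files.
TIME_COLUMN = "Event time"
TIME_FORMAT = "%Y-%m-%dT%H:%M:%SZ"


def parse_time(value: str) -> datetime:
    """Parse one timestamp cell into a timezone-aware datetime."""
    return datetime.strptime(value, TIME_FORMAT).replace(tzinfo=timezone.utc)


def merge_timeline(csv_texts, start, end):
    """Merge several per-region CSV exports into one time-ordered list.

    csv_texts: iterable of CSV file contents (one string per region).
    start, end: inclusive datetime bounds of the incident window.
    """
    rows = []
    for text in csv_texts:
        for row in csv.DictReader(io.StringIO(text)):
            if start <= parse_time(row[TIME_COLUMN]) <= end:
                rows.append(row)
    # Sort across all regions so the actions can be replayed in order.
    rows.sort(key=lambda r: parse_time(r[TIME_COLUMN]))
    return rows
```

To feed it real files, read each export with `pathlib.Path(name).read_text()` and pass the resulting strings in as `csv_texts`.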

Root cause (what I found)

The timeline made the root cause clear. On Oct 12 at ~21:00, the attacker used a programmatic AWS access key associated with my account to perform a sequence of actions:

- Created multiple IAM users to establish a backdoor.

- Launched EC2 instances (the likely source of the cost spike).

- Created an AWS Organization and spun up a new account (which they later closed).

- Kicked off Resource Explorer indexing to map what else they could access.

In short: the attacker operated with programmatic credentials already tied to my account (those are the keys I later deleted), and used them to set up persistence and run workloads.
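A quick way to surface this kind of sequence from exported CloudTrail rows is to filter on a short watch-list of event names. The sketch below is illustrative only: the event names are real AWS API actions, but the watch-list is my own selection, and the `Event name`, `User name` and `Event time` keys are assumptions about how the rows were exported.

```python
# Watch-list of API actions matching the attacker's steps above.
# It is deliberately short; extend it for your own account.
WATCHED_EVENTS = {
    "CreateUser": "new IAM user (possible backdoor)",
    "CreateAccessKey": "new programmatic credentials",
    "AttachUserPolicy": "permissions attached to a user",
    "RunInstances": "EC2 instances launched",
    "CreateKeyPair": "new SSH key pair",
    "CreateOrganization": "AWS Organization created",
    "CreateAccount": "member account created",
    "CloseAccount": "member account closed",
}


def flag_events(rows, known_users):
    """Return the watched events, marking those made by unfamiliar users.

    rows: list of dicts, one per CloudTrail event (assumed column names).
    known_users: set of IAM user names you created yourself.
    """
    findings = []
    for row in rows:
        event = row.get("Event name", "")
        if event not in WATCHED_EVENTS:
            continue
        user = row.get("User name", "")
        findings.append({
            "time": row.get("Event time", ""),
            "event": event,
            "user": user,
            "why": WATCHED_EVENTS[event],
            "unknown_user": user not in known_users,
        })
    return findings
```

Note that a leaked key acts under your own user name, so a "known" user does not mean the event is safe. The `unknown_user` flag only helps spot the backdoor users created afterwards.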

Below I’ll lay out the full attack flow (step-by-step) including the attacker’s likely intent.

Hour 4 - finding the breach

Now comes the embarrassing part - let’s get it over with.🤦♂️

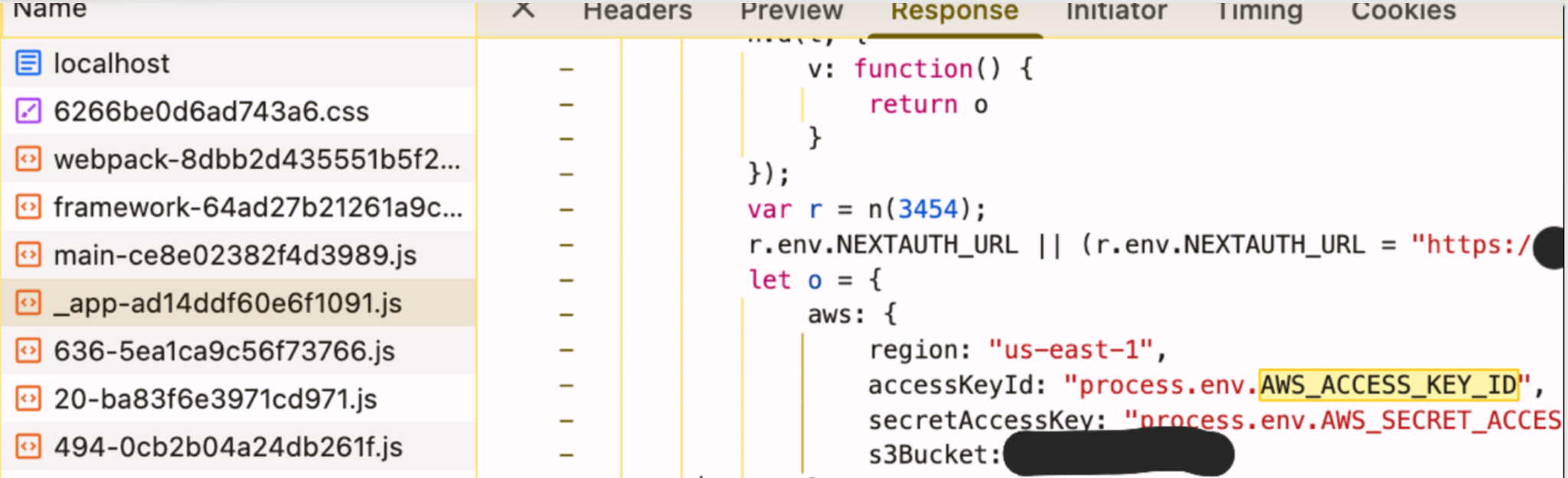

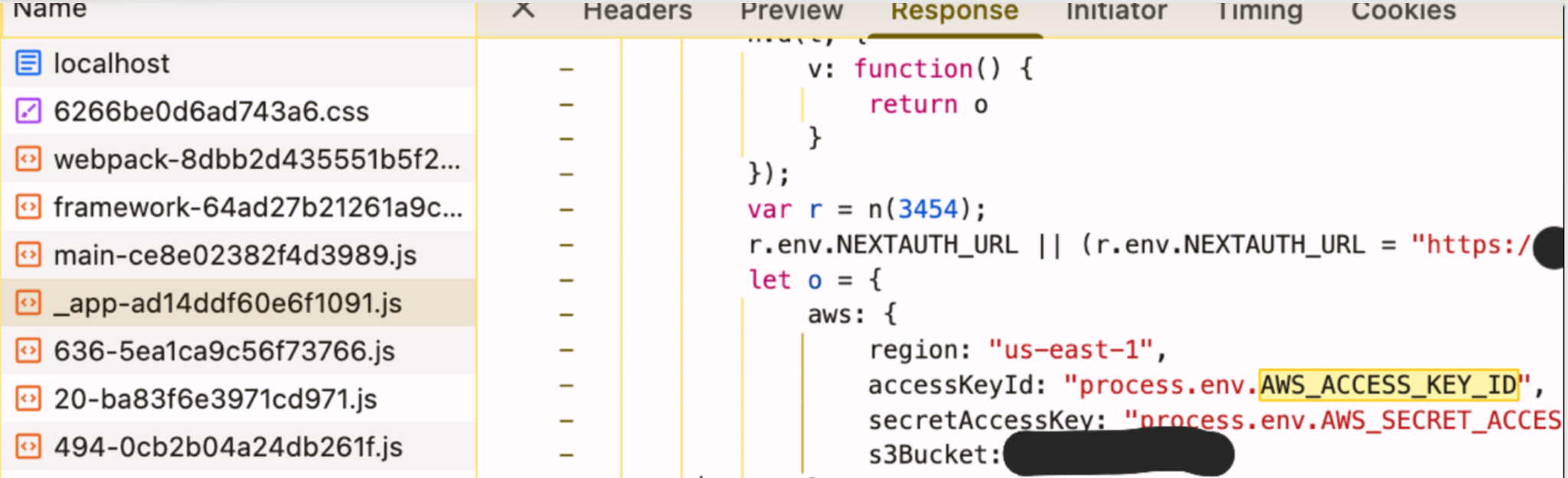

As I mentioned, I’d been working on my website (shhhh…). I made a product decision to implement the React UI with SSR (Server Side Rendering). That means the pages aren’t static - they’re rendered on the server and then hydrated in the browser.

(SSR = rendered on the backend as much as possible, after which React “hydrates” the HTML in the browser to make it interactive; for example, reacting to screen dimensions is impossible server-side, but fetching APIs works.)

The reason I chose SSR was performance: faster page loads make the site more attractive to search engine bots. I used NextJS for this.

Because it’s SSR, APIs can live in the same server codebase, and NextJS fully supports this. I assumed:

“They must pack the API JS code to be served on the server as one JS file, and the React components as a different JS file.” Makes sense, right? This is a basic security control…

Except - I didn’t realize all my backend code was exposed to the UI. Yup, all of it.

Later, I integrated S3 for artifact storage and added an AWS key - in the code.

“I’ll move to Secrets Manager right after I implement this important feature.”

Fast forward - one day later, I got hacked.

Check the next section for what I could have done differently - spoiler: plenty of options.

But for now, let’s circle back: what was the attacker doing? Step by step, we’ll go through their actions and intentions.

Summary - Chronological attack flow

Crawl

I suspect the attacker started by crawling the internet, searching for secrets via regex or AI classification.
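I can only guess at the attacker’s tooling, but the defensive version of the same idea is easy to sketch: scan your own build output for anything shaped like an AWS access key ID before you deploy. The pattern below relies on the documented format of access key IDs (20 uppercase alphanumeric characters starting with `AKIA` for long-term keys or `ASIA` for temporary ones). The function name and the sample string are made up for illustration, and the key is AWS’s own documentation example, not a real one.

```python
import re

# Access key IDs are 20 characters: a 4-letter prefix plus 16
# uppercase letters or digits. AKIA = long-term, ASIA = temporary.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_access_key_ids(text: str) -> list[str]:
    """Return the distinct access-key-ID-shaped strings found in text."""
    return sorted(set(ACCESS_KEY_ID.findall(text)))


# Hypothetical snippet of a client-side JavaScript bundle.
bundle = 'const s3 = new S3({accessKeyId: "AKIAIOSFODNN7EXAMPLE"});'
print(find_access_key_ids(bundle))
```

This only catches the key ID, not the secret itself, but a key ID in a file served to browsers is already a sign that server-side code leaked into the client.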

My domain and website were relatively new, so it’s likely he scanned newly created websites for easy targets.

Oct 12th, Evening - Attack Begins

The attacker decides to strike. He creates a new IAM user and assigns it admin access, detaching it from my own user as quickly as possible.

Then he waits six hours - for two reasons:

- If his user creation triggers security tools, he doesn’t want to reveal his hand.

- He waits for my nighttime, when I’m likely offline.

After the wait, he launches a huge EC2 instance, along with a KeyPair and his own Security Group to allow SSH access. Sadly, because I didn’t want to pay for EC2, I deleted the instance before thinking to snapshot it. So I’ll never know exactly what he planned - Ubuntu Server 24, plain setup. My guess? Crypto-mining.

The attacker continues using his EC2 for a few hours.

Oct 15th, Middle of the night - Establishing Persistence

By now, he’s been in my account for over 48 hours. He decides to establish persistence:

- Creates four additional IAM users.

- One of them is literally my own name.

Then the “fun” begins:

He launches his “Spam Army”, flooding my inbox with useless emails. The goal: increase the chance that I’d miss AWS’s important notifications.

He was betting that my email had already been validated by AWS and that I wouldn’t notice the verification email - which would allow him to create an Organization without me seeing it.

I, unfortunately, saw the email… and approved it. 😅

Five minutes later, he attempts the next move: creating the Organization. At this point, he might have thought his plan worked - that my email had been pre-validated and I hadn’t seen the notice. But I did see it, and my suspicion started rising.

He goes ahead anyway:

- Creates the Organization.

- Adds a new account within it.

- Then closes that account 90 seconds later.

I’m not entirely sure what his end goal was here. My guess: he tried something he hadn’t done before, attempting to take ownership of the Organization, but it didn’t succeed.

This back-and-forth - Spam Army, Organization creation, and quick account closure - continued through the night and early morning of Oct 15th.

Oct 15th, Morning - Frustration.

By morning, the attacker was clearly getting agitated. He’d poured a lot of effort into the Spam Army, but it wasn’t working the way he wanted.

His focus starts slipping. He tries whatever he can think of next, still assuming his army of emails is active. In reality, the flood is slowing down.

Switching tactics, he begins indexing all my resources using Resource Explorer, looking for anything useful.

That’s when he notices something interesting: I have two domains managed in Route53 with SES enabled.

SES (Simple Email Service) is an AWS managed service for mass, automated email sending. When connected to a custom domain using DKIM records, it allows sending emails from any address within the domain.

His thought process was clear:

“If I can send emails from these domains, I might be able to phish the admin and take over.”

Here’s why SES + Route53 + DKIM is important: If DKIM records are properly set between SES and Route53, anyone with SES access can send emails from any address in the domain. The attacker planned to leverage this to send phishing emails from my own domains.

What he didn’t know: AWS had already notified me about the DKIM changes.

He spent 30 minutes planning the phishing attempt, but by that point, I had already removed all his access to my account.

And just like that, his plan was stopped dead in its tracks.

Who is the attacker? What was their purpose?

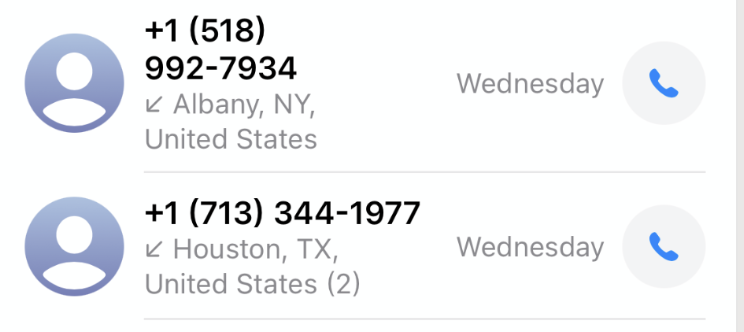

Here’s what I do know from the logs and what I tried to verify.

I never gained access to the attacker’s running EC2 - I had already terminated it before thinking to snapshot it, so there’s no instance image to inspect. The AMI and snapshot he used looked like stock, out-of-the-box Ubuntu 24.04 - nothing exotic or fingerprintable there. The only custom artifact in the snapshot was a single empty file. That makes attribution harder.

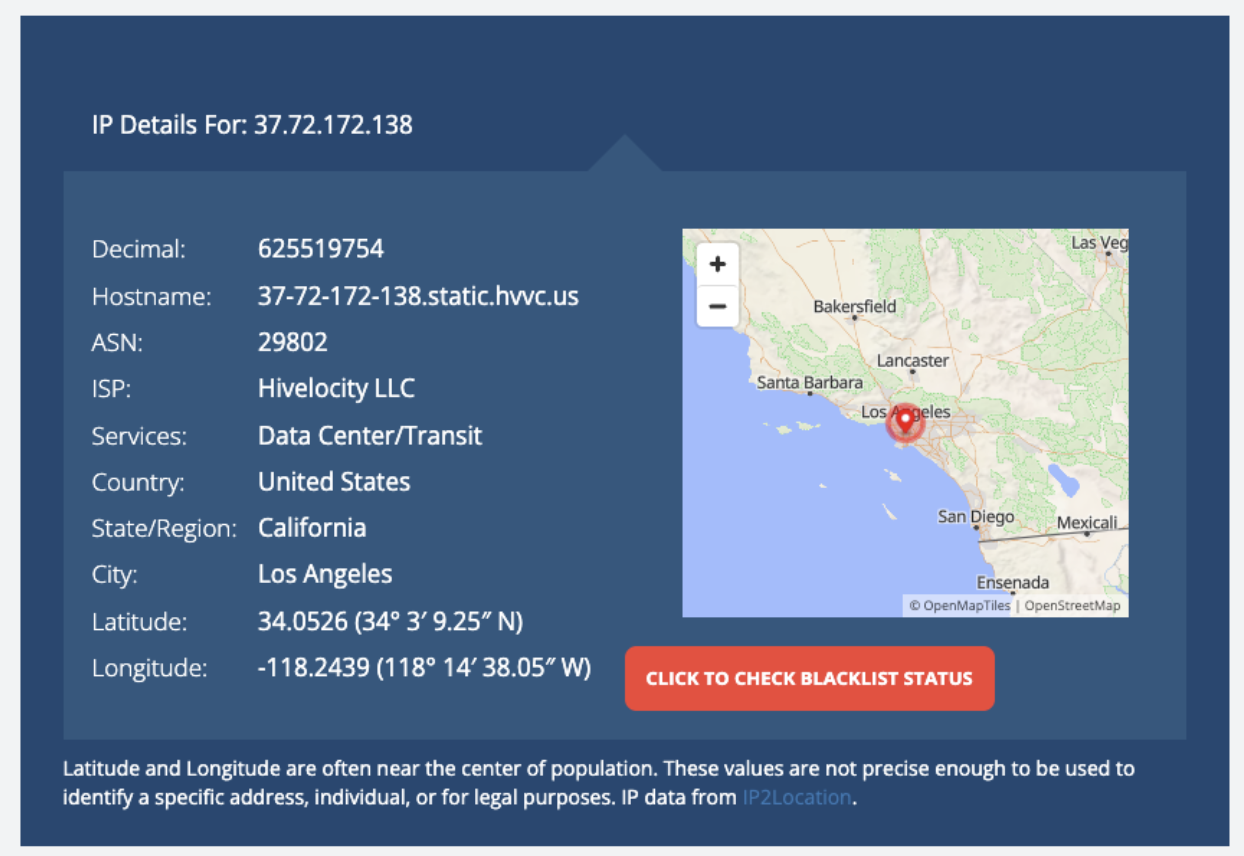

The very first recorded action from the attacker came from this IP: 37.72.172.138.

What I did next: I ran a passive lookup on the IP (geolocation/ASN/reverse DNS) and contacted the apparent provider - Highvelocity - with an abuse report. So far, no reply.

What this likely means (educated guesses, not certainties):

The attacker was opportunistic and financially motivated. The EC2 profile (large instance, keypair/SSH access) strongly suggests crypto-mining or running resource-heavy workloads to convert compute into profit.

The SES/DKIM activity and the attempt to send phishing from my own domains indicate a secondary or parallel goal: use my domain reputation to scale phishing attacks (and possibly harvest credentials).

The attacker probed broadly (resource indexing, spam flood) - a classic pattern for someone scanning for exposed secrets and then weaponizing any access they find.

Limits of what I can prove publicly:

- Without the EC2 instance snapshot or additional ISP cooperation, I can’t confidently attribute the attack to a particular actor or group.

- Stock AMIs and transient infrastructure are deliberately chosen by attackers to avoid easy fingerprinting.

I reported everything to the provider and filed an abuse complaint. If they respond, I’ll share updates. For now, attribution remains inconclusive - but the behavior and artifacts point to financially motivated abuse (mining + phishing), rather than a targeted espionage campaign.

AWS helped a lot with cleaning up my account, but wasn’t much help when it came to identifying the attacker.

Have any idea how to investigate further? Contact me!

Insights

How to avoid the leak in the first place

- Don’t say “it won’t happen to me” - it can, and it will.

- Manage secrets in Secrets Manager or an HSM instead of hardcoding keys.

- Understand your frameworks and tech - know what your SSR or serverless code actually exposes.
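As a rough sketch of the second point, here is what reading a secret at runtime can look like instead of pasting it into the code. `get_secret_value` and the `SecretString` field are part of the real Secrets Manager API; the function name, the secret name in the usage comment, and the assumption that the secret is stored as JSON are mine.

```python
import json


def load_secret(client, secret_id: str) -> dict:
    """Fetch a JSON-formatted secret and return it as a dict.

    client: an object that behaves like boto3.client("secretsmanager").
    secret_id: name or ARN of the secret (assumed to hold a JSON string).
    """
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


# Usage on the server side only (requires boto3 and AWS permissions):
#   import boto3
#   config = load_secret(boto3.client("secretsmanager"), "my-site/config")
# "my-site/config" is a hypothetical secret name.
```

Passing the client in as a parameter keeps the function easy to test with a fake, and keeps the call in code that clearly runs only on the server.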

How to minimize the risk in case of a leak

- Add basic security measures like GuardDuty - cheap insurance.

- Don’t use the root user for day-to-day operations.

- Rotate secrets regularly so a leaked key is short-lived.

- Fragment secret access - never give one key unlimited power.
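To make “never give one key unlimited power” concrete, here is a sketch of the kind of policy the leaked key should have carried: read and write objects under one prefix of one S3 bucket, and nothing else. The policy structure is standard IAM JSON; the bucket name, the prefix and the helper function are hypothetical.

```python
import json


def s3_artifact_policy(bucket: str, prefix: str = "artifacts/") -> dict:
    """Build an IAM policy allowing object reads/writes under one prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Only the two actions an upload/download feature needs.
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


if __name__ == "__main__":
    # "example-artifacts-bucket" is a placeholder bucket name.
    print(json.dumps(s3_artifact_policy("example-artifacts-bucket"), indent=2))
```

With a key scoped like this, the attacker could have touched the stored artifacts but could not have created IAM users, launched EC2 instances, or created an Organization.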

What to do in case of an incident

- Trust your hunch - if it feels wrong, it probably is.

- Respond first, research later - containment comes before analysis.

- Don’t blindly trust ChatGPT for incident validation.

- Contact AWS immediately - they have tools and processes for real-time containment.

- Don’t leave any stone unturned - check every IAM user, key, instance, and log.

Epilogue

Looking back, this incident was humbling - and, honestly, a little embarrassing. I’m a cloud architect and a former vulnerability hunter, yet a single overlooked key in my personal account gave someone enough access to poke around, spin up EC2 instances, and almost misuse my domains. It’s easy to think, “It won’t happen to me,” but attackers don’t discriminate, and even small personal projects can be exploited.

What saved me was speed, vigilance, and knowing where to look, but the experience reinforced a simple truth: security is not a checkbox, it’s a mindset.

Even after regaining control, the lessons stayed clear. Secrets must be managed properly, privileges limited, alerts monitored, and unusual activity treated as critical. Respond fast, rotate keys, understand your frameworks, and never underestimate logs and notifications. At the end of the day, I got lucky - nothing critical was lost - but the takeaway is clear: assume every account can be targeted, and design your security accordingly. Even experts make mistakes; owning them, fixing them, and sharing your story is how we all get safer.